Taming AWS re:Invent 2020 sessions with AWS Glue DataBrew

9 min read, last updated on 2021-01-31

Motivation

AWS re:Invent 2020 was even crazier than previous ones. Mostly because of the insane amount of talks available for the participants. There were so many of them and based just on the abstract and title, it was tough to choose. Also, time overlap and different time zones (even that each talk had 3 other time slots for different geographic areas) did not help.

I did my best, and during December 2020 and January 2021 (yes, we had an additional week in January 😅), I have watched 155 talks. For all of them, I made notes. In many cases, I have learned a lot, but sometimes I was disappointed too. That’s why I wanted to share the best ones and prepared a blog post. However, it was not an easy task - you need to consider that we are talking about more than 800 different sessions, times 3 slots for different time zones and foreign languages.

Luckily, there is a helping hand available!

AWS Glue DataBrew for the rescue!

How did I prepare that then?

Well, as a part of pre-re:Invent, AWS announced that a new service called DataBrew joins the AWS Glue family. It is a unique visual data preparation tool that makes it easy for data analysts and data scientists to clean and normalize data. That sounds like a tool I need for that use case!

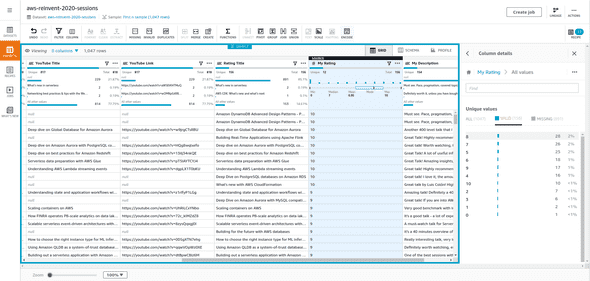

As you can see on the image above, I am not so merciful about the rating (assuming that everything above 7 is worth your time 😉). However, to get such results, we need data.

Data Sources

For this assignment, I had to utilize 3 different sources.

Notes about 155 AWS re:Invent 2020 talks in Roam Research

The first source is prepared from my notes. As the conference started, I made notes in my favorite note-taking tool Roam Research. Thankfully I started with some structure in mind, but I ended up having 155 pages with notes and ratings - one per talk. One of the most exciting capabilities of Roam Research is its querying engine, which allows us to ask questions and get answers from our pile of notes. It misses some features, but it’s a web application, so with the small help of JavaScript I have extracted a CSV data set with title, rating, and a short text about my feelings about this presentation.

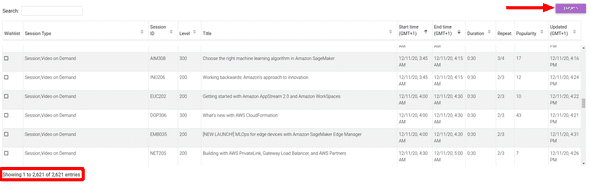

List of all sessions downloaded from Cloud Pegboard

Now I need a complete list of talks that happened during AWS re:Invent 2020. The official website is cumbersome to crawl, but luckily there is a fantastic project called Cloud Pegboard by Ken Robbins which allows downloading all sessions in a CSV file. The most important columns for us are Session ID and Title. So far, so good - 2/3 sources collected, now it’s time for YouTube links!

YouTube links to all sessions

So this could be a little bit easier if AWS left those videos in the official conference portal. However, all videos (SPOILER ALERT: not all yet) were uploaded to YouTube, and access to the conference portal is blocked.

So we need to connect somehow a YouTube link with our entries. There is a considerable probability that this will be even more comfortable in the future thanks to Cloud Pegboard, but right now, there are no links to YouTube sessions for AWS re:Invent 2020.

We need to be a little bit smarter: you can go to the official AWS Events YouTube channel and harvest the list of the videos via API or just by crawling/extracting it via JavaScript. I did the latter and obtained the 3rd CSV file with the title of the talk and YouTube link.

When we have those 3 files on disk, it’s time to use AWS Glue DataBrew.

Cleaning and extracting value from that data!

AWS Glue DataBrew introduces a few new terms:

- Dataset: it is a logical representation of the data collected inside Amazon S3 Buckets, Amazon Redshift tables, Amazon RDS tables, or from the metadata stored inside AWS Glue Data Catalog. You can upload files (e.g., CSV, Parquet, JSON, or XLSX) directly to the DataBrew, and that will automatically pre-process them into a table ready to operate.

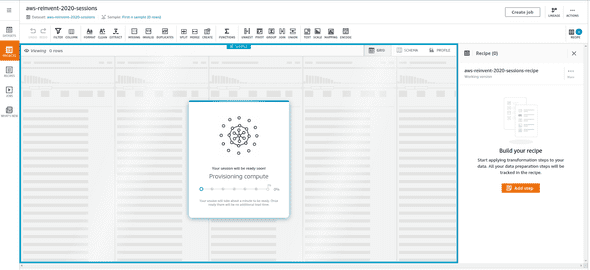

- Project: this is our workspace for exploration and analysis. It provides very nice capabilities, which include insights and various statistics about our data. It feels like a more specialized browser-based spreadsheet user interface for manipulating, cleaning, and transforming data. Additionally, each active session of such a project is our unit of billing.

- Recipe: it is a set of operations that we are applying on a data set inside a project, but we can also reuse them between those. The recipe can be saved as a YAML and JSON definition.

- Job: each recipe can be run without a project in an automated way, based on a prepared schedule. This helps to industrialize the prepared operations during the exploration step.

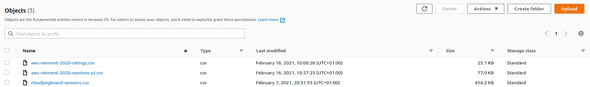

In our case, we have created 3 data sets by uploading files to the AWS Glue DataBrew user interface. They are landing on the Amazon S3 and looks as follows:

Then we start building our recipe. First, we have removed unnecessary columns, then we have removed duplicated rows, cleaned the Title column, then we have joined 3 data sets via Title of the talk, which as a result required us to rename columns as we can’t have duplicated names of the columns.

You can lookup below the final recipe, which contains all operations (after exporting it to YAML):

- Action:

Operation: REMOVE_VALUES

Parameters:

sourceColumn: Session Type

ConditionExpressions:

- Condition: IS_NOT

Value: >-

[

"Session,Video on Demand",

"Session",

"Keynote,Video on Demand",

"Leadership Session,Session"

]

TargetColumn: Session Type

- Action:

Operation: DELETE

Parameters:

sourceColumns: >-

[

"Wishlist",

"Start time (GMT+1)",

"End time (GMT+1)",

"Duration",

"Repeat",

"Popularity",

"Updated (GMT+1)",

"Level"

]

- Action:

Operation: DELETE_DUPLICATE_ROWS

Parameters:

duplicateRowsCount: '1555'

- Action:

Operation: REMOVE_COMBINED

Parameters:

collapseConsecutiveWhitespace: 'false'

customValue: '[NEW LAUNCH!]'

removeAllPunctuation: 'false'

removeAllQuotes: 'false'

removeAllWhitespace: 'false'

removeCustomCharacters: 'false'

removeCustomValue: 'true'

removeLeadingAndTrailingPunctuation: 'false'

removeLeadingAndTrailingQuotes: 'false'

removeLeadingAndTrailingWhitespace: 'true'

removeLetters: 'false'

removeNumbers: 'false'

removeSpecialCharacters: 'false'

sourceColumn: Title

- Action:

Operation: REMOVE_COMBINED

Parameters:

collapseConsecutiveWhitespace: 'false'

customValue: '[New Launch!]'

removeAllPunctuation: 'false'

removeAllQuotes: 'false'

removeAllWhitespace: 'false'

removeCustomCharacters: 'false'

removeCustomValue: 'true'

removeLeadingAndTrailingPunctuation: 'false'

removeLeadingAndTrailingQuotes: 'false'

removeLeadingAndTrailingWhitespace: 'false'

removeLetters: 'false'

removeNumbers: 'false'

removeSpecialCharacters: 'false'

sourceColumn: Title

- Action:

Operation: JOIN

Parameters:

joinKeys: '[{"key":"Title","value":"title"}]'

joinType: OUTER_JOIN

leftColumns: '["Session Type","Session ID","Title"]'

rightColumns: '["title","link"]'

secondInputLocation: >-

s3://{BUCKET}/datasets/aws-reinvent-2020-sessions-yt.csv

secondaryDatasetName: aws-reinvent-2020-sessions-yt

- Action:

Operation: RENAME

Parameters:

sourceColumn: title

targetColumn: YouTube Title

- Action:

Operation: RENAME

Parameters:

sourceColumn: link

targetColumn: YouTube Link

- Action:

Operation: JOIN

Parameters:

joinKeys: '[{"key":"Title","value":"title"}]'

joinType: OUTER_JOIN

leftColumns: >-

'["Session Type","Session ID","Title",

"YouTube Title","YouTube Link"]'

rightColumns: >-

'["title","rating","description"]'

secondInputLocation: >-

's3://{BUCKET}/datasets/aws-reinvent-2020-ratings.csv'

secondaryDatasetName: aws-reinvent-2020-ratings

- Action:

Operation: RENAME

Parameters:

sourceColumn: title

targetColumn: Rating Title

- Action:

Operation: RENAME

Parameters:

sourceColumn: rating

targetColumn: My Rating

- Action:

Operation: RENAME

Parameters:

sourceColumn: description

targetColumn: My DescriptionYou can download (or save on Amazon S3) the cleaned-up data set as a final result. You can also export your recipe or turn it into a job that will run on-demand or periodically based on the recipe we have prepared and explored.

In my case, the final data set has 180 KB:

My thoughts about AWS Glue DataBrew

The overall experience is surprisingly pleasant (which is not always the case with AWS User Interfaces). The tool is very intuitive in most cases, but there are some rough edges here and there. The usual operations like joining, cleaning, taking care of data quality in the columns are straightforward and self-explanatory. The full view completes with such small details as Data Lineage visualization.

In terms of those small annoyances, I can name a few:

- Sampling is applied per project, which initially makes sense, but it’s hard to adjust later in the process.

- If you want to filter your data with multiple conditions with a combination of

OR,AND,NOTacross different operations, you can’t do that at the moment. - Removing duplicates from the data set requires removing columns; there is no way to specify either ignoring columns or selecting just a subset of columns that will be treated as a key for determining duplication.

There is one missing piece that I noted during the work on that project. The first one is the lack of the User Defined Functions. The set of the available operations is impressive, but I wanted to filter out data based on the language, and I could not do that. However, a very simple AWS Lambda with a call to Amazon Transcribe would solve it. However, at the moment, it’s not possible.

Last but not least: how much does it cost?

You are paying for the time spent in the total number of sessions, where each session costs $1. A session is initiated when you open a DataBrew project, and each session is 30 minutes. It is worth mentioning that the first 40 interactive sessions are free for first-time users. You can monitor the SessionCount via Amazon CloudWatch, and that will give you at least an approximation regarding the costs.

Additional costs are related to the jobs. If you are executing your recipes as jobs, you pay $0.48 per node hour, where one node is 4 vCPU and 16 GB of RAM. Jobs have a 1-minute billing duration, and by default AWS Glue DataBrew allocates 5 nodes for each job. Hopefully, I do not have to explain that, but all underneath interactions like data transfer, Amazon S3 requests, storage, etc., are paid separately.

In my case, it cost $0 because I did not use jobs, and I spent no more than 3 sessions total on that small project.

Summary

I hope you liked this, as much as I enjoyed working with AWS Glue DataBrew. However, this blog post is just half of the story. For some of you, more important than a technical explanation of how I achieved that is the curated list of the recommended AWS re:Invent 2020 sessions I collected here. Enjoy!

If you are looking for my opinion about the most exciting announcements from the AWS re:Invent 2020, I have prepared such an article here.

That’s definitely not the last when I worked with AWS Glue DataBrew, and I will eagerly observe its evolution. I feel that this service and relatively fresh AWS Glue Studio can be a fresh start for the whole AWS Glue family - and I am looking forward to it! 💪