My Personal Top 5 Announcements from AWS re:Invent 2020

9 min read, last updated on 2021-02-18

Introduction

AWS re:Invent 2020 was … different. Usually, it is a week-long event in Las Vegas focused on learning and fun, where the announcements almost look like an excuse to organize the conference. However, in 2020 nothing could be the same as in previous years.

This year's edition was a very intense conference with a strong emphasis on learning: more than 800 talks (of which I reviewed 155 and collected a subjective list of the best ones here), plenty of announcements, and everything organized virtually.

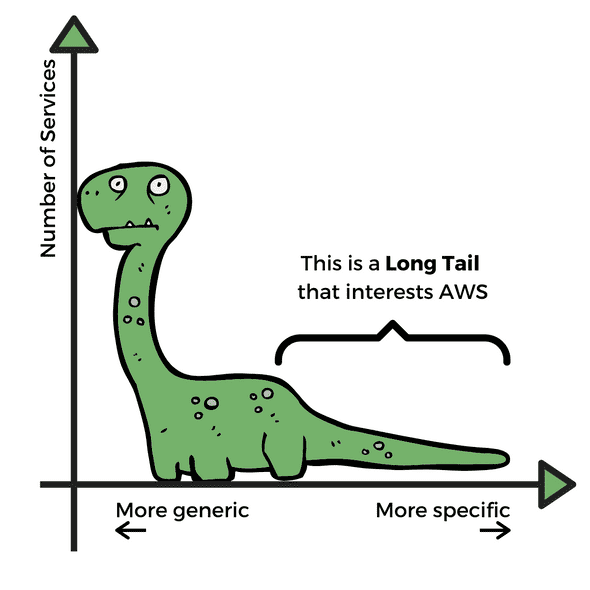

When it comes to most of the new services, it's hard to resist the feeling that they focus on long-tail use cases. For those of you who have not heard about long-tail economics: it is a strategy Amazon is well known for. It is defined by the ability to reach a small but highly engaged and passionate target market or customer segment with a customized product or service.

After this year's revelations, it feels like AWS focused on monetizing Amazon's experience in domains like supply chain, logistics and delivery, and the Internet of Things (IoT).

You don't believe me, right? Have a look at the list: AWS Panorama, Amazon Lookout for Equipment, AWS Monitron … and that's just the tip of the iceberg. This trend is even more visible if you look at the announcements since 2018, when they released services for niches such as robotics or AR/VR.

However, that's not the end: AWS is also closing gaps in niches where it is already a leader but does not yet offer the most comprehensive portfolio. The best example is how many services joined the Amazon SageMaker family during the last conference. Thanks to that, Amazon SageMaker, as an umbrella service, now offers the broadest set of services covering the machine learning (ML) development lifecycle end to end.

Speaking of differences and the influence of the mothership (Amazon): this was the last AWS re:Invent keynote delivered by Andy Jassy as the head of AWS, because in Q3 2021 he will succeed Jeff Bezos as CEO of Amazon (source).

Nevertheless, at least one thing did not change: AWS released many exciting updates and new options. And obviously, I have my favorites.

My Personal Top 5 Announcements

Why those?

Before I present my top five, I would like to clarify two things. I am an AWS maniac, but since we are talking about my personal favorites, my preferences are skewed toward data processing, data analytics, and databases.

Moreover, I am also including pre-re:Invent announcements (released just before the conference) in the list, because some of those services and updates are excellent from my perspective and worth noticing.

Additionally, I have tried to base this list on hands-on practice as much as possible. Of course, in some cases that wasn't possible, because those services were announced either as a preview or as coming soon. Still, there are ways to try them (one of the reasons why it is worth joining the AWS Community Builders program, which will open again in Q1 2021), or at least to learn more about them from the re:Invent talks.

So, let's review those announcements together! Starting with fifth place, which is occupied by …

Number 5: Five important updates for Amazon S3

Amazon S3 is synonymous with AWS cloud success. Released in 2006, it quickly became the de facto standard and reference for fully managed cloud storage. It is surprising how deeply it is ingrained in so many use cases. Does it mean that it's stable and AWS just reaps the benefits?

AWS re:Invent 2020 showed that such a statement is very far from the truth.

- Amazon DynamoDB export to an Amazon S3 bucket.

- Amazon S3 Storage Lens.

- Replication into multiple destination buckets.

- Amazon S3 Bucket Keys.

- Read-after-write consistency for all operations.
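To make the multi-destination replication update more concrete, here is a minimal Python sketch that builds a replication configuration as a plain dictionary, with one rule per destination bucket. The dictionary shape follows the `ReplicationConfiguration` parameter of boto3's `put_bucket_replication`, but the `build_replication_config` helper, the role ARN, and the bucket ARNs are hypothetical, and the sketch itself does not call AWS. Keep in mind that replication also requires versioning on the source and on every destination bucket.

```python
from __future__ import annotations


def build_replication_config(role_arn: str, destination_bucket_arns: list[str]) -> dict:
    """Hypothetical helper: one replication rule per destination bucket.

    Each rule gets a unique Priority, and an empty prefix filter makes the
    rule apply to every object in the source bucket.
    """
    rules = []
    for priority, bucket_arn in enumerate(destination_bucket_arns, start=1):
        rules.append(
            {
                "ID": f"replicate-to-{priority}",
                "Priority": priority,
                "Status": "Enabled",
                "Filter": {"Prefix": ""},
                # Required when a rule uses Filter.
                "DeleteMarkerReplication": {"Status": "Disabled"},
                "Destination": {"Bucket": bucket_arn},
            }
        )
    return {"Role": role_arn, "Rules": rules}


if __name__ == "__main__":
    config = build_replication_config(
        "arn:aws:iam::123456789012:role/example-replication-role",
        ["arn:aws:s3:::example-replica-eu", "arn:aws:s3:::example-replica-us"],
    )
    # With credentials and versioned buckets, this could then be applied with:
    # boto3.client("s3").put_bucket_replication(
    #     Bucket="example-source-bucket", ReplicationConfiguration=config)
    print(len(config["Rules"]))
```

Before this update, fanning out to several buckets meant chaining replication or writing custom copy logic; now each destination is simply another rule.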

Of those five updates, three solve minor or major annoyances, but the last two are tremendously important!

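First, I would compare the release of Amazon S3 Bucket Keys to the already praised gp3 Amazon EBS volumes: it's a game-changer from the cost-efficiency perspective, because a bucket-level key drastically reduces the number of requests S3 makes to AWS KMS for SSE-KMS encrypted objects (AWS advertises up to 99% lower KMS request costs).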
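To put the cost argument into numbers, here is a deliberately simplified Python model, not a description of how S3 actually schedules KMS calls. It assumes one KMS request per object written with SSE-KMS and no Bucket Key, a hypothetical reuse factor of one KMS request per 100 objects with a Bucket Key, and KMS request pricing of $0.03 per 10,000 requests (check current pricing for your region). The function names are illustrative only.

```python
from decimal import Decimal

# Assumption: KMS request price in USD per 10,000 requests.
KMS_PRICE_PER_10K_REQUESTS = Decimal("0.03")


def kms_request_cost(requests: int) -> Decimal:
    """Cost in USD of the given number of KMS API requests."""
    return Decimal(requests) / Decimal(10_000) * KMS_PRICE_PER_10K_REQUESTS


def monthly_kms_cost(objects_written: int, objects_per_bucket_key: int = 1) -> Decimal:
    """Simplified model: one KMS request per object without Bucket Keys,
    or one request per `objects_per_bucket_key` objects when a
    bucket-level key is reused (hypothetical reuse factor)."""
    requests = -(-objects_written // objects_per_bucket_key)  # ceiling division
    return kms_request_cost(requests)


if __name__ == "__main__":
    without_keys = monthly_kms_cost(10_000_000)
    with_keys = monthly_kms_cost(10_000_000, objects_per_bucket_key=100)
    reduction = 1 - with_keys / without_keys
    print(without_keys, with_keys, reduction)
```

Under these assumptions, 10 million objects per month go from $30.00 to $0.30 in KMS request charges, a 99% reduction; Decimal is used so the currency arithmetic stays exact.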

Finally, I want to emphasize how important and how hard it is to achieve read-after-write consistency for all operations. I have written about it in my blog post about TLA+ usage at AWS. What does it bring, besides removing mildly infuriating annoyances when handling operations like overwrites? A plethora of use cases that can now be designed differently, both outside and inside AWS. To name just one: I am sure that it will soon give us delivery and timing guarantees for Amazon S3 Event Notifications.
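To see why the overwrite case used to be annoying, here is a toy Python model of the difference. It is a simplification for illustration only and says nothing about how S3 is implemented internally. In the eventually consistent store, reads are served from a replica that catches up asynchronously, so a read right after an overwrite can return the previous version; in the strongly consistent store, a write is acknowledged only after the replica is updated.

```python
from __future__ import annotations


class EventuallyConsistentStore:
    """Toy model: writes go to a primary copy; reads are served from a
    replica that only catches up when replicate() runs (e.g. in the background)."""

    def __init__(self) -> None:
        self._primary: dict[str, str] = {}
        self._replica: dict[str, str] = {}

    def put(self, key: str, value: str) -> None:
        self._primary[key] = value

    def get(self, key: str) -> str | None:
        return self._replica.get(key)

    def replicate(self) -> None:
        self._replica = dict(self._primary)


class StronglyConsistentStore(EventuallyConsistentStore):
    """Same toy model, but a write is acknowledged only after the replica
    is updated, so every read observes the latest successful write."""

    def put(self, key: str, value: str) -> None:
        super().put(key, value)
        self.replicate()


def read_after_overwrite(store: EventuallyConsistentStore) -> str | None:
    store.put("reports/daily.csv", "v1")
    store.replicate()
    store.put("reports/daily.csv", "v2")  # overwrite an existing key
    return store.get("reports/daily.csv")


if __name__ == "__main__":
    print(read_after_overwrite(EventuallyConsistentStore()))  # stale read: v1
    print(read_after_overwrite(StronglyConsistentStore()))    # latest write: v2
```

With strong read-after-write consistency, application code no longer needs retries or version checks to guard against the stale read in the first case.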

Number 4: AWS Glue Elastic Views

This service has an exciting value proposition: it is all about combining and replicating data across multiple data stores by creating materialized views, defined with PartiQL, without having to write custom code.

It sounds great on paper and in the marketing materials, but there are two big "buts". The first one is availability, because the service is still in Preview. The second one is the limited set of supported data sources and targets. It looks like the only source of knowledge about this service is the AWS re:Invent 2020 talk, which is one of the recommended talks from my blog post. Still, I think this service will be an exciting addition to the AWS Glue family in the future.

Number 3: AWS Glue DataBrew

This is a pre-re:Invent announcement, but it is definitely worth mentioning. It is a new visual data preparation tool that helps you clean and normalize data without writing code.

At first, I was really skeptical about this service. I would have preferred a more systematic update that resolves the messy situation between AWS Glue 1.0 and 2.0. Instead, we got another GUI. The truth is that its purpose is different, and the tool itself provides exciting capabilities.

If you want to learn how to use AWS Glue DataBrew in practice, check out my blog post about how I used it to pre-process and clean data about AWS re:Invent 2020 talks for another blog post.

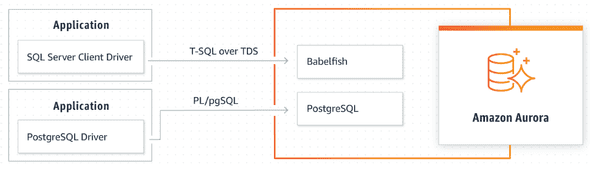

Number 2: Babelfish for Amazon Aurora with PostgreSQL compatibility

Another service that is still in preview, and it tackles a very ambitious problem. Its tagline about "running Microsoft SQL Server (including compatibility with T-SQL) applications on PostgreSQL with little to no code change" sounds like snake oil. However, for people dealing with Microsoft SQL Server licensing issues or legacy software that cannot be easily rewritten, it promises "salvation".

I wanted to verify that. It turns out that the official and recommended talk from AWS re:Invent 2020 tones down the marketing lines and sets realistic expectations. I can definitely recommend this talk to understand how it will work and to think about how it will be incorporated into PostgreSQL, because that is not a trivial thing, yet it is totally possible (hint: you can always fork the source code).

Nevertheless, I am not surprised that Babelfish will be released as open source. Maintaining a compatibility layer, including the quirks and specifics of both SQL dialects, sounds like a non-trivial challenge in the long term. However, the premise is excellent, and personally, I am really counting on this release.

Honorable Mentions

Amazon Redshift updates

This service is another example of the success of the AWS cloud. However, competitors are pushing back very hard from many different directions (like Snowflake or the Databricks Lakehouse architecture).

That's why AWS keeps evolving the Amazon Redshift platform to stay competitive. They released several bigger and smaller updates during AWS re:Invent 2020. Here is the complete list:

- Amazon Redshift ML, which is still in Preview but looks very interesting.

- Advanced Query Accelerator (AQUA), again in Preview.

- Support for JSON and semi-structured data (the SUPER data type).

- Finally, we can move clusters between Availability Zones (AZs).

- Data Sharing, which is in Preview but enables exciting capabilities: accessing data without copying or moving it (unfortunately only for the ra3 family 😢).

- We received a new instance type: ra3.xlplus.

- Now we can run federated queries against MySQL (Amazon RDS and Amazon Aurora for now, in Preview).

- We have a new helper for table maintenance: Automatic Table Optimization, which chooses sort and distribution keys automatically.

Increasing data retention to 1 year for Amazon Kinesis Data Streams

This is a pretty self-explanatory announcement, but I would like to emphasize the size of the jump. AWS increased the maximum retention from 7 days to one year, which required a different approach to the infrastructure and rearchitecting one of its tier 1 (in other words: core) services.
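Retention for Kinesis Data Streams is configured in hours (the RetentionPeriodHours parameter of the IncreaseStreamRetentionPeriod API), so the jump is easiest to see in those units: from 168 hours to 8,760 hours, roughly 52 times longer. The small Python sketch below computes that ratio and adds a hypothetical `check_retention_hours` helper that validates a requested value against the 24-hour minimum and the new maximum before calling the real API; the helper is an illustration, not part of any AWS SDK.

```python
HOURS_PER_DAY = 24

# Kinesis Data Streams expresses retention in hours.
MIN_RETENTION_HOURS = 24                       # also the default
OLD_MAX_RETENTION_HOURS = 7 * HOURS_PER_DAY    # 168 hours before the update
NEW_MAX_RETENTION_HOURS = 365 * HOURS_PER_DAY  # 8,760 hours after the update


def check_retention_hours(hours: int) -> int:
    """Hypothetical client-side check before requesting a retention period."""
    if not MIN_RETENTION_HOURS <= hours <= NEW_MAX_RETENTION_HOURS:
        raise ValueError(
            f"retention must be between {MIN_RETENTION_HOURS} and "
            f"{NEW_MAX_RETENTION_HOURS} hours, got {hours}"
        )
    return hours


if __name__ == "__main__":
    ratio = NEW_MAX_RETENTION_HOURS / OLD_MAX_RETENTION_HOURS
    print(f"maximum retention grew about {ratio:.1f}x")
```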

Amazon Neptune ML

I must admit that Amazon Neptune is one of my favorite services, but this announcement made it even cooler by introducing Graph Neural Networks (GNNs) via Amazon SageMaker and the Deep Graph Library (DGL).

Number 1: Amazon Managed Workflows for Apache Airflow (MWAA)

I have prepared a separate article with my honest review of this service. Even though I am perfectly aware of how many rough edges it has at the moment, I am just as excited that it arrived.

First things first: Apache Airflow has become the state-of-the-art workflow management platform. You may disagree with that, but for many data teams, combining it with Kubernetes has risen to the rank of a data infrastructure design pattern. At the same time, however, Apache Airflow is very pesky to manage and operate reliably.

In that sense, AWS did what it consistently does best: it monetized its operational knowledge by providing a fully managed service. And that's great, because customers can spend the time and money they save on other areas.

The most significant selling point here is that you get the same Apache Airflow as everyone else, in a fully managed form, with well-known plugins that remain compatible and with integration across the AWS portfolio (though it is not perfect yet). As someone who has worked with AWS Data Pipeline, AWS Glue Workflows, and AWS Step Functions, I am thrilled that we received an alternative that is fully compatible with the open-source version, because it removes another item from the list of contraindications to diving deeper into the cloud. It still requires some work (and support for Apache Airflow 2.0 😉), but it already offers an interesting value proposition worth considering.

Summary

That’s it from my side! 😅

Do you agree with the list I provided? What are your top 5 services? Or maybe you have some other observations about the newly released services and their trends? I would love to talk with you about that, so please share your preferences and comments below!